IBM and Google both say they are on track to build full-scale quantum computers by the end of the decade.

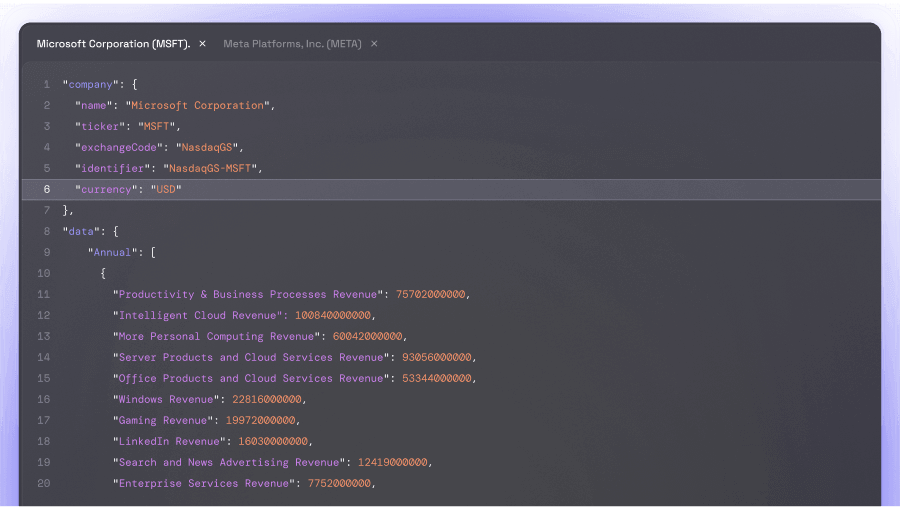

In June, IBM presented a revised architecture blueprint built on a new coupling technology meant to reduce interference between qubits, a problem that had surfaced in its 433-qubit "Condor" chip. For error correction, the company is pursuing a low-density parity-check (LDPC) approach, which requires about 90% fewer qubits than the "surface code" used by Google. Google, for its part, is the only company so far to have demonstrated that its method holds up as the system is scaled, and it regards IBM's approach as technically riskier.
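To show roughly where a figure like 90% can come from, here is a back-of-the-envelope comparison in Python. It assumes IBM's published "gross code" parameters (144 data qubits plus 144 check qubits, encoding 12 logical qubits at code distance 12) and a rotated surface code that needs 2d² − 1 physical qubits per logical qubit at distance d. These parameters are assumptions about the codes in question, and the count ignores the additional qubits needed to perform logical operations, so treat it as a rough sketch rather than either company's own accounting.

```python
def surface_code_qubits(distance: int) -> int:
    """Assumed rotated surface code: distance**2 data qubits
    plus distance**2 - 1 measurement qubits per logical qubit."""
    return 2 * distance**2 - 1


def qubit_savings(logical: int, distance: int, ldpc_physical: int) -> float:
    """Fraction of physical qubits saved by the LDPC code compared with
    encoding the same number of logical qubits in separate surface-code patches."""
    surface_total = logical * surface_code_qubits(distance)
    return 1 - ldpc_physical / surface_total


# Assumed gross-code parameters: 12 logical qubits at distance 12,
# using 144 data + 144 check = 288 physical qubits.
GROSS_LOGICAL = 12
GROSS_DISTANCE = 12
GROSS_PHYSICAL = 144 + 144

surface_total = GROSS_LOGICAL * surface_code_qubits(GROSS_DISTANCE)
savings = qubit_savings(GROSS_LOGICAL, GROSS_DISTANCE, GROSS_PHYSICAL)

print(surface_total)     # 3444
print(f"{savings:.1%}")  # 91.6%
```

Under these assumptions, 288 physical qubits do the work of roughly 3,400 in the surface code, a saving of a little over 90%.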

Scaling requires not only stable qubits but also a drastic reduction in complexity. Fine-tuning every component individually is not feasible in large systems, which is why manufacturers are developing more robust components and more efficient manufacturing processes. Google aims to bring the cost per machine down to around USD 1 billion, in part by making its components ten times more cost-efficient.

Beyond the physics, the engineering effort remains enormous: the systems must run inside special refrigerators cooled to near absolute zero, be distributed across modular chips, and make do with far less cabling. Competing technologies such as trapped ions or photonic qubits offer advantages in stability but contend with slower computing speeds and harder networking.

For market experts such as Gartner analyst Mark Horvath, the picture is clear: IBM's latest designs could work, but so far they exist only on paper. Which technology prevails is likely to depend on government support, for example from DARPA, which is already assessing which providers can scale most quickly to practical usefulness.