Takeaways

- 90% of developers use AI tools daily, but only 24% trust the results.

- The DORA AI Capabilities Model by Google offers strategies for secure AI integration.

Software developers are embracing artificial intelligence with the enthusiasm of children in a candy store. Their confidence in what these tools produce, however, is about as strong as the public's belief in politicians' promises.

According to the recently published DORA report by Google Cloud, 90% of developers now use AI tools daily in their work, an increase of 14% compared to the previous year. However, only 24% of respondents trust the information provided by these tools.

The report, based on a survey of nearly 5,000 technology experts worldwide, highlights an industry striving to move quickly without breaking things. Developers spend an average of two hours daily with AI assistants, from code generation to security checks. Nevertheless, 30% of respondents say they trust the AI results only "a little" or "not at all."

According to Ryan Salva, who is responsible for the Gemini Code Assist tools at Google, using AI is unavoidable in the daily work of a Google engineer. Sundar Pichai, CEO of Google, noted that more than a quarter of the company's new code is generated by AI systems and that this has increased the productivity of engineering teams by 10%.

Developers primarily use AI for code creation and modification. Other areas of application include troubleshooting, review and maintenance of legacy systems, and educational purposes such as explaining concepts or creating documentation.

Despite the lack of confidence, over 80% of the developers surveyed report that AI increases their work efficiency, while 59% notice improvements in code quality. Interestingly, 65% of respondents describe themselves as heavily dependent on these tools, even though they do not fully trust them.

The discrepancy between trust and productivity is also reflected in the Stack Overflow 2025 survey, which shows distrust in the accuracy of AI rising from 31% to 46% in one year, despite a high adoption rate of 84%.

Google goes beyond simply documenting this trust gap. The company introduced the DORA AI Capabilities Model, a framework that outlines seven practices intended to help teams capture the value of AI while limiting the risks. These include user-centered design, clear communication protocols, and what Google calls "small batch workflows," which are meant to keep AI from operating without supervision.

The report also describes team archetypes ranging from "harmonious high-achievers" to groups stuck in a "legacy bottleneck." Teams with strong existing processes were able to build on their strengths through AI, while fragmented organizations saw their weaknesses exposed.

The full report on AI-assisted software development and the accompanying documentation on the DORA AI Capabilities Model are available through Google Cloud's research portal. The materials offer concrete guidance for teams that want to integrate AI more proactively, provided they have enough confidence to put that guidance into practice.

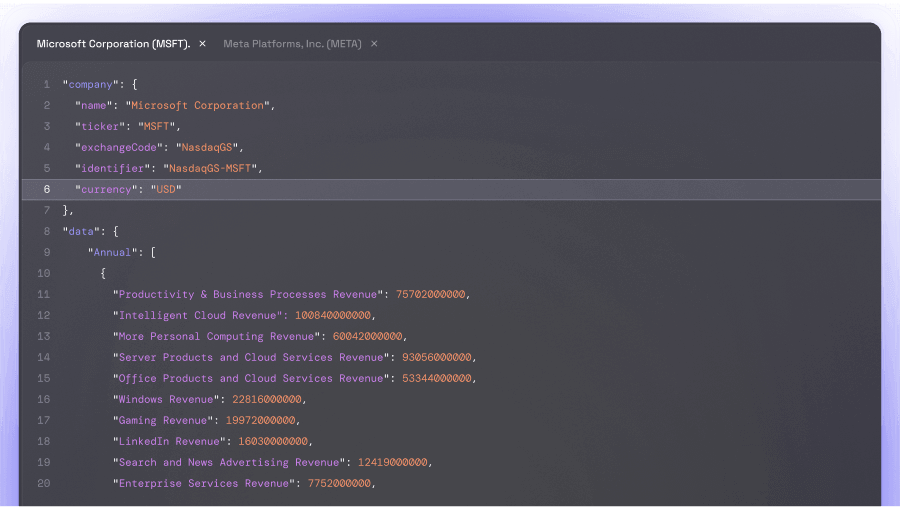
